Unified Solutions for Enterprise AI

ThunderCat Technology delivers integrated AI infrastructure solutions powered by NVIDIA’s DGX platform. These solutions combine compute, networking, storage, software, and lifecycle services into a cohesive system optimized for enterprise needs.

Key Offerings

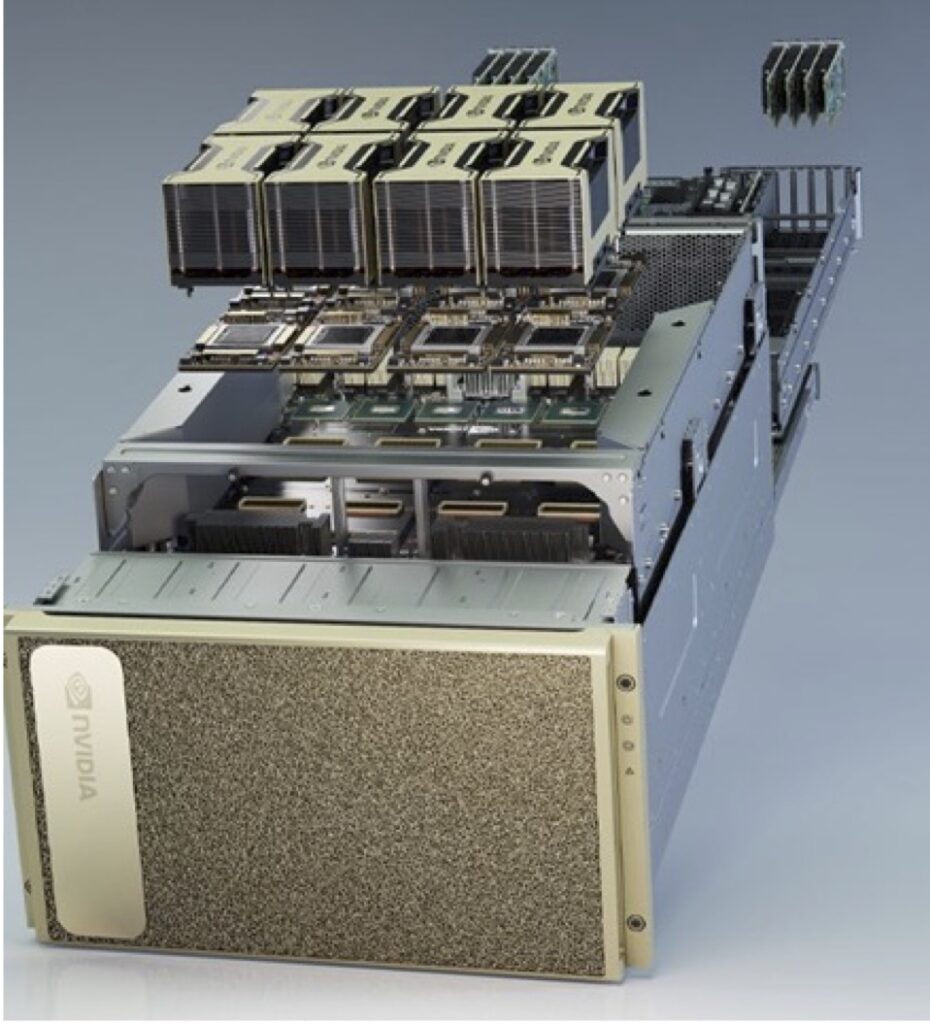

NVIDIA DGX

NVIDIA DGX systems are purpose-built for enterprise AI, combining state-of-the-art NVIDIA GPUs with an optimized software stack to deliver high performance for AI and deep learning workloads.

NVIDIA DGX SuperPOD

A turnkey data center solution designed for large-scale AI workloads. It includes:

- High-performance NVIDIA DGX systems.

- Ultra-low latency networking using NVIDIA InfiniBand.

- Certified storage optimized for diverse data types.

- White-glove deployment services ensuring seamless implementation.

NVIDIA DGX BasePOD

A reference architecture that integrates compute, networking, storage, and software into scalable enterprise infrastructure.

Lifecycle Services:

- Capacity planning and site evaluation.

- Installation and post-deployment optimization.

- Ongoing training through NVIDIA’s Deep Learning Institute and expert support from NVIDIA DGXperts.

NVIDIA DGX Cloud

NVIDIA DGX Cloud provides instant access to AI supercomputing through a browser-based interface. This service allows enterprises to scale their AI development without the need for on-premises infrastructure. Key features include:

- Dedicated clusters: Access to dedicated clusters of NVIDIA DGX hardware.

- Integrated software stack: Includes NVIDIA Base Command Platform and NVIDIA AI Enterprise for streamlined development workflows.

- Flexible hybrid-cloud support: Scale workloads seamlessly across cloud and on-premises environments.

- Expert support: Access to NVIDIA engineers for optimization and troubleshooting.

DGX Cloud reduces infrastructure complexity, enabling faster time-to-value for generative AI, large language models (LLMs), and other advanced applications.

Base Command – Run:AI

NVIDIA Base Command manages DGX systems, simplifying AI development and deployment through:

- Cluster management: Centralized tools for provisioning, monitoring, and maintaining DGX clusters.

- Workload orchestration: Efficient scheduling of jobs across single-node or multi-node setups using Kubernetes or Slurm.

- Run:AI integration: Improves resource allocation by pooling and virtualizing GPU resources across teams, raising utilization.

- Comprehensive reporting: Real-time insights into system performance, resource usage, and job status.

Base Command paired with Run:AI enables organizations to maximize their DGX infrastructure’s potential while streamlining MLOps workflows.